Addressing and Preventing Wikipedia Vandalism

- Unwelcome and illegitimate edits on Wikipedia are considered vandalism.

- Administrators on Wikipedia have the ability to protect pages and images to prevent vandalism and resolve content disputes.

- Reporting vandalism on Wikipedia is important in maintaining the integrity of the platform, but there are no foolproof preventive measures in place.

No Wikipedia page is safe from vandalism. Wikipedia vandalism is a deliberate attempt to compromise the integrity of a Wikipedia page.

Vandalism threatens the reliability and legitimacy of the platform that many rely on as an authoritative reference. This article will explore the nature of Wikipedia vandalism, its consequences, methods for swiftly reversing damage, challenges in keeping volunteer-led crowdsourced content secure, and the importance of community collaboration to reduce vandalism for the ongoing benefit of all Wikipedia users.

Sections

- What is Wikipedia vandalism?

- Reporting vandalism

- Who commits vandalism

- Consequences of vandalizing Wikipedia

- Tools that prevent vandalism

- Preventing vandalism remains an ongoing challenge

What is Wikipedia vandalism?

Wikipedia is one of the most popular and extensively used online reference platforms, with more than 6 million articles in English alone and editions in over 300 languages, all contributed by volunteers around the world. This open-access approach has democratized the creation of information, enabling global collaboration. The flip side of this openness is Wikipedia’s vulnerability to vandalism: unwanted edits that compromise the integrity of its content.

Vandalism ranges from replacing text with nonsense or inappropriate language to deliberately adding false information.

This threatens the reliability and usefulness of Wikipedia, making vandalism prevention a critical priority for the site and its community. Because a Wikipedia article can strongly influence a company’s reputation, vandalism can also have serious consequences for the companies it targets.

Reporting vandalism

What should you do if you spot vandalism on Wikipedia? Wikipedia has clear guidelines on how to handle it. Start by reverting the vandalism, then move on to the next two options, warning and reporting, if the same editor continues to vandalize a page.

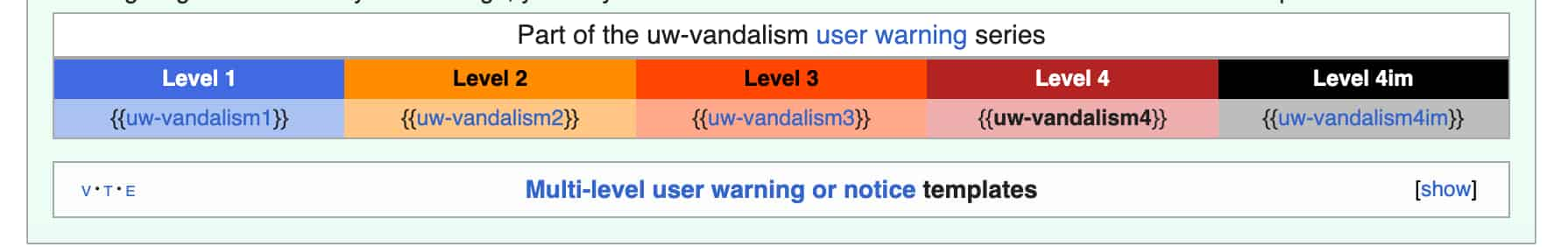

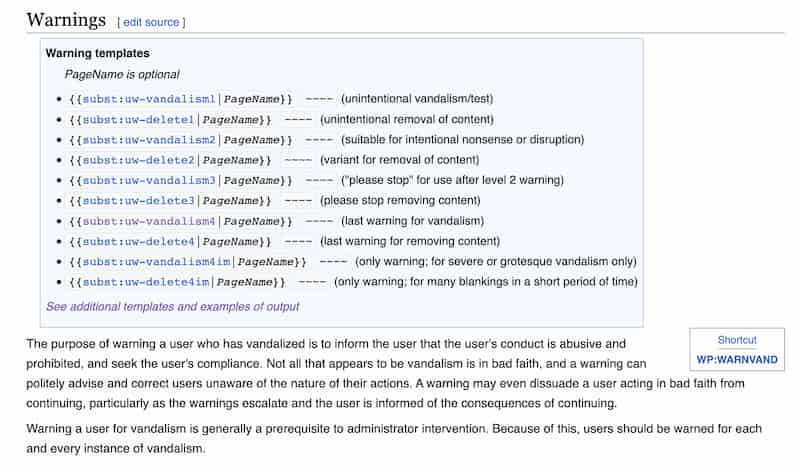

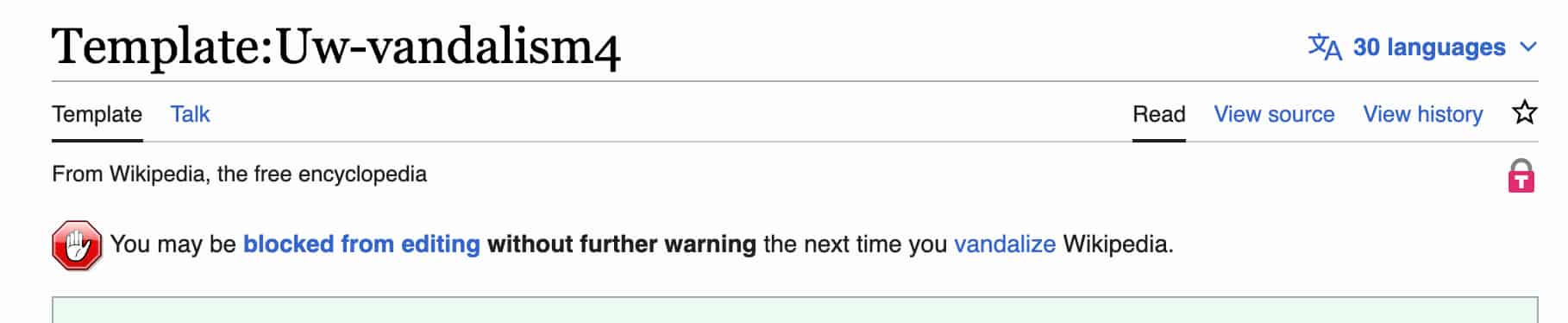

Warn the vandal

Just like you can add templates to Wikipedia pages for various reasons, you can add a template to an editor’s talk page if they are making inappropriate edits. Here’s an overview of the most commonly used warning templates on Wikipedia:

Report vandals

Report vandals who continue to vandalize after having received a final warning. There are different pages for different cases of vandalism:

- Most cases of vandalism can be reported here.

- Use this page for less simple cases.

- Report persistent vandals or sockpuppets here.

Reports help distribute the vandalism mitigation workload. However, Wikipedia lacks strong compulsory identity verification. Anonymity enables valuable contributions from sensitive sources but also allows banned vandals to return through new accounts. So, community vigilance remains vital to address vandalism that slips past preventive systems.
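The revert, warn, report sequence described above can be sketched as a small decision function. This is only an illustration: it assumes the four-level `uw-vandalism1` to `uw-vandalism4` warning template series used on English Wikipedia, and the function name and return format are hypothetical choices made for this example.

```python
# Assumption: four escalating warning levels, the last one being the
# "final warning" after which a vandal should be reported.
FINAL_WARNING_LEVEL = 4


def next_step(prior_warnings):
    """Suggest a follow-up after reverting an act of vandalism.

    `prior_warnings` is the number of recent warnings already left on the
    editor's talk page. Returns an (action, template) tuple.
    """
    if prior_warnings < 0:
        raise ValueError("prior_warnings must be non-negative")
    if prior_warnings >= FINAL_WARNING_LEVEL:
        # The final warning was already given, so escalate to a report.
        return ("report", None)
    # Otherwise leave the next warning level on the editor's talk page.
    return ("warn", "uw-vandalism" + str(prior_warnings + 1))


print(next_step(0))
print(next_step(4))
```

In practice, editors use judgment rather than a strict counter. Severe vandalism may justify skipping levels, and an apparent newcomer mistake may call for a friendly note instead of a warning.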

Page protection to deter vandalism

Administrators have access to page protection tools that can prevent editing by unregistered users or new accounts. Protection must be weighed against its impact on legitimate participation, because over-protection can hamper the inclusion Wikipedia strives for. For that reason, it is used primarily on highly visible or frequently vandalized pages. Protection does not wholly prevent intentional damage, since established registered users can still edit pages protected this way, so it works best when coupled with active vandalism monitoring.

Cross-Wiki vandalism

Because all Wikimedia sites draw from the same pool of editors, vandalism issues on one Wikipedia site can impact others:

- A vandal barred on the English Wikipedia may turn to vandalizing the Spanish or Russian Wikipedias, for example.

- Global administrator collaboration allows the sharing of vandalism reports across projects.

- Global blocks can also restrict vandals from editing other language editions.

Relying on the same community of users has advantages but also exposes vulnerabilities like cross-project vandalism.

Who commits vandalism

Bad people. OK, it’s more nuanced than that. Vandalism can come from unregistered users, whose edits are attributed to an IP address, or from registered Wikipedia accounts. Sometimes editors are paid to vandalize an article; other times they have their own agendas.

Intentional vandalism

Some vandals are highly motivated to make clearly damaging edits. Motivating factors include:

- Political, business, or personal agendas to spread misinformation

- Financial incentives to promote brands or products

- Ideological beliefs leading to biased edits

- Other factors like reputation sabotage or protest

Unintentional vandalism

However, not all improper edits are intentional vandalism. Some people may make edits perceived as vandalism simply because they don’t yet understand Wikipedia policies and norms. This includes:

- Editors new to Wikipedia

- Inadequately informed editors

- Well-meaning users whose edits are nonetheless unconstructive

Evaluating contributions from new users

Wikipedia’s norms are complex, and not all well-meaning new users understand them at first. So it’s important not to assume every improper edit is vandalism, particularly when it comes from an IP address. Beyond outright damage, edits perceived as unconstructive or self-promotional may come from users unfamiliar with policies. Providing guidance and warnings before blocking new accounts can help well-intentioned users contribute positively. Gentle outreach is key to building a productive editing community.

Why is it important to deal with vandalism quickly?

It’s important to distinguish between purposeful damage and unintentional mistakes. Regardless of motive, however, damaging edits should be dealt with swiftly to maintain article quality. A nuanced approach is needed when evaluating edits by newer contributors: warnings and guidance can help mitigate unintentional violations. The goal is to revert clear vandalism without wrongly accusing good-faith editors. Understanding the sources and motivations for vandalism allows more strategic prevention. Ultimately, preserving content integrity requires addressing both intentional and accidental violations through education and prompt response.

Consequences of vandalizing Wikipedia

According to Wikipedia’s terms of service, vandals can be blocked from editing pages temporarily or permanently. Vandalism from a shared IP address can also lock out other people on the same network if administrators block the whole address or a range of addresses. Vandalism also creates extra work for editors who monitor recent changes and for admins who field vandalism reports, taking time away from building encyclopedic content. Ultimately, readers suffer from misleading or unreliable information if vandalism isn’t promptly corrected.

Importance of quick reversion

Since Wikipedia is so widely used, pages can be viewed thousands of times in a short period. This makes it critical to revert vandalism as soon as it is identified before false information reaches readers. The “recent changes” feed enables editors to check new edits and roll back clearly damaging ones swiftly. Nuanced cases may require investigation before action.

Overall, the goal is to remove vandalism while preserving valid edits, which underscores the need for judiciousness.
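As a rough illustration of monitoring the recent changes feed, the sketch below builds a query for the MediaWiki Action API’s `list=recentchanges` module and flags edits that remove a large amount of text. The helper names and the 500-byte threshold are assumptions made for this example, not Wikipedia’s own patrol logic. A large removal is only a signal for human review, not proof of vandalism.

```python
from urllib.parse import urlencode

API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def build_recent_changes_url(limit=50):
    """Return a URL asking the Action API for recent edits with size data."""
    params = {
        "action": "query",
        "list": "recentchanges",
        "rctype": "edit",
        "rcprop": "title|ids|sizes|user|timestamp",
        "rclimit": limit,
        "format": "json",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def flag_large_removals(changes, threshold=500):
    """Return changes that shrank a page by at least `threshold` bytes."""
    flagged = []
    for change in changes:
        delta = change.get("newlen", 0) - change.get("oldlen", 0)
        if delta <= -threshold:
            flagged.append({"title": change["title"], "delta": delta})
    return flagged


# Hand-written sample shaped like entries in the API's "recentchanges" list.
sample = [
    {"title": "Example A", "oldlen": 4200, "newlen": 15},
    {"title": "Example B", "oldlen": 1000, "newlen": 1040},
]
print(build_recent_changes_url(limit=10))
print(flag_large_removals(sample))
```

The sample data keeps the example runnable offline. A real patrol tool would fetch the URL, read the `recentchanges` list from the JSON response, and show flagged edits to a human reviewer before anything is reverted.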

Tools that prevent vandalism

Wikipedia has plenty of features designed to prevent vandalism. Here are a few of them:

- Active monitoring: Wikipedia has an extensive list of vandalism monitoring tools here.

- Protection mechanisms: Wikipedia articles can be temporarily or permanently protected to restrict editing. This is often applied to high-traffic or contentious pages that are more vulnerable to vandalism.

- User registration: Requiring users to register accounts before editing protected pages can act as a deterrent to vandalism. Registered users are often more accountable for their actions.

- Edit reversion: Wikipedia’s “undo” feature allows editors to quickly revert changes, making it relatively easy to correct vandalism.

- Edit filters: Wikipedia employs automated edit filters that can identify and prevent the submission of edits containing potentially harmful content.

- Talk pages: Wikipedia provides discussion pages for every article where editors can communicate, potentially resolving disputes or discussing controversial edits.

- Bots and AI: Wikipedia also harnesses the power of bots and artificial intelligence to assist in the detection and prevention of vandalism.

- Community values: Wikipedia’s emphasis on maintaining a neutral point of view and verifiability encourages a culture of factual, unbiased information, reinforcing the community’s commitment to reliability.
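To make the edit filter idea from the list above more concrete, here is a toy filter with two hand-picked rules. Everything in it is hypothetical, including the function name, the thresholds, and the rules themselves. Wikipedia’s real edit filters are configured by the community and are far more extensive.

```python
import re

# Hypothetical rule: the same character repeated 10 or more times in a row.
REPEATED_CHARS = re.compile(r"(.)\1{9,}")


def check_edit(old_text, new_text):
    """Return a list of reasons an edit looks suspicious (empty if none)."""
    reasons = []
    # Rule 1: a substantial page is cut to under 10% of its previous size.
    if len(old_text) > 200 and len(new_text) < 0.1 * len(old_text):
        reasons.append("page mostly blanked")
    # Rule 2: the edit introduces a long run of one repeated character.
    if REPEATED_CHARS.search(new_text) and not REPEATED_CHARS.search(old_text):
        reasons.append("long run of repeated characters added")
    return reasons


article = "The quick brown fox jumps over the lazy dog. " * 10
print(check_edit(article, "lol"))
print(check_edit(article, article + "!!!!!!!!!!!!"))
print(check_edit(article, article + "A sourced new sentence."))
```

Rules this simple will produce false positives, so a filter of this kind is better suited to tagging edits for review than to rejecting them outright.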

Tactics to reduce vandalism

Making vandalism prevention a priority for Wikipedia and its community involves:

- Monitoring recent changes feeds to identify and revert obvious vandalism quickly.

- Investigating suspicious edits before reverting to avoid wrongly accusing good-faith editors.

- Educating new users on Wikipedia policies to reduce unintentional violations.

- Using page protection tools judiciously to prevent counterproductive over-policing.

- Collaborating across language Wikipedias to address cross-wiki vandalism.

- Relying on the community to report hard-to-catch vandalism.

- Balancing open participation with quality control through guidelines and content oversight.

- Promoting collaborative norms focused on creating the best encyclopedia possible.

There are challenges in preventing vandalism on a constantly growing and crowdsourced platform. However, with shared vigilance, prompt responses, and care in evaluating contributions, the prevalence of vandalism can be reduced. This relies on the efforts of editors, administrators, and all Wikipedia users working together to fulfill the site’s mission as an expansive repository of knowledge.

Preventing vandalism remains an ongoing challenge

Ultimately, addressing vandalism through reactive measures has limits. As an ever-expanding platform dependent on anonymous crowd-sourced participation for growth, fully preventing vandalism on Wikipedia may be unrealistic. Controls must be balanced against openness. But ongoing anti-vandalism efforts can reduce its prevalence and impact. This relies on the diligence of experienced editors orienting newcomers, administrators upholding policies fairly, and everyone working collaboratively to keep improving this online public good.

About the author

Brianne Schaer is a Writer and Editor for Reputation X, an award-winning online reputation management services agency based in California. Brianne has more than seven years of experience creating powerful stories, how-to documentation, SEO articles, and Wikipedia content for brands and individuals. When she’s not battling AI content bots, she is cruising around town in her Karmann Ghia. You can see more of her articles here and here.
