Can You Spot a Fake Wikipedia Edit? Sleuthing Tips

People try to exploit Wikipedia’s open editing policy to add false information, but the online encyclopedia has tools and volunteer teams to suss out those edits.

Highlights

- Wikipedia uses powerful tools and a large network of volunteer editors to detect bogus edits.

- Wikipedia edits can be tracked by the editor’s username or IP address.

- False information is often quickly removed from Wikipedia.

- If you see false information on a page, flag the bogus content for a Wikipedia editor to review.

Wikipedia can be a part of your reputation management strategy and a great source of corporate visibility and marketing. But it also comes with some risks because it could include all kinds of information: good and bad. It is, after all, editable by the public.

Although Wikipedia has an impressive approval process and catches many incorrect facts before or shortly after they are published, it still relies on a network of volunteer writers and editors to detect fake edits and catch people creating Wikipedia pages for their own company or themselves.

To find and report bogus Wikipedia edits, you will need to monitor your Wikipedia page closely and ensure that edits have clear sources. We will explain what you need to know about who can make edits to a Wikipedia page, how to trace edits and the process for disputing the information.

What is Wikipedia vandalism?

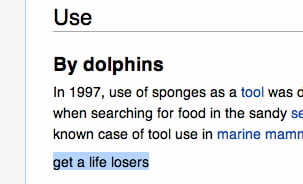

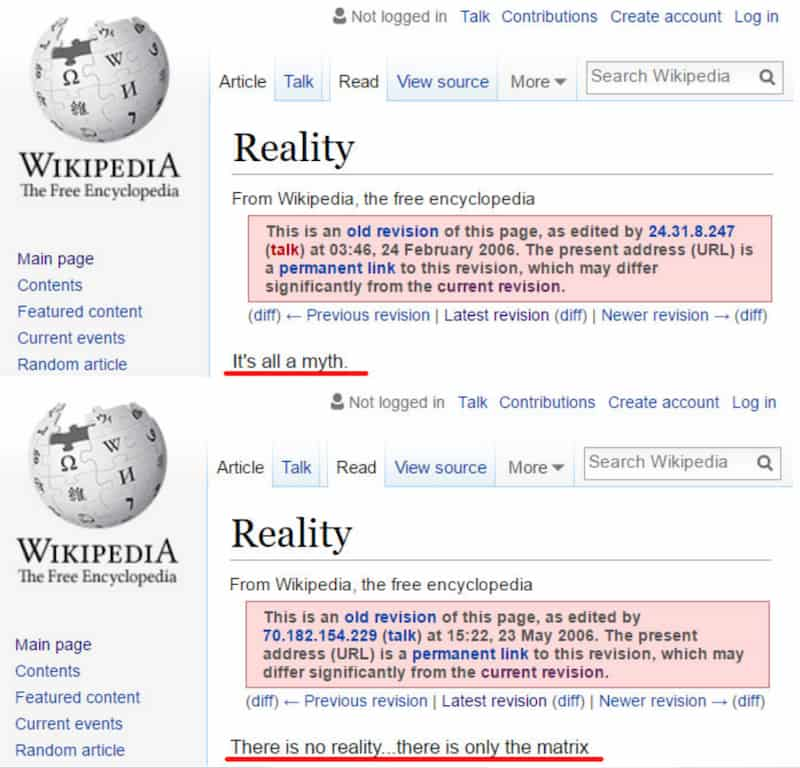

Wikipedia takes its role of providing accurate information seriously – so seriously that it has a name for editing pages with malicious or disruptive intent. It calls this process Wikipedia vandalism.

It defines vandalism as “any addition, removal, or modification that is humorous, nonsensical, a hoax, or degrading in any way.”

And once a page has seen too many vandalism attempts, Wikipedia locks it down to only allow its most trusted users to make changes to that page. This helps the platform avoid further misinformation, but it also can make it challenging to correct or update information quickly.

Wikipedia vandalism case study

In 2008, a group of students enrolled at George Mason University fooled Wikipedia’s approval process and fact-checking by inventing the life of Edward Owens, a fictional pirate. Part of the students’ success came from weaving together real facts with complete falsehoods. They even created sources for their information through YouTube videos and interviews with experts to make the changes look valid.

Once the stunt came to light, Wikipedia’s founder Jimmy Wales was livid and called it an act of digital vandalism. But the stunt also exposed a whole new challenge the online encyclopedia faced: some people will intentionally make changes in bad faith.

Humans. Who knew they were capable of such things?

When the class tried to change history again in 2012, they were caught fairly quickly by an astute community on Reddit. This time the students focused on the true story that the original Star-Spangled Banner was stitched together at a brewery in Baltimore, and they tried to tack on a phony history of a made-up beer.

By this time, however, some of Wikipedia’s weaknesses had been brought to light, and fact-checkers knew where to look. The change history on several pages showed that many of the new ‘facts’ had been added very recently and within a short timeframe, arousing suspicion.
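The pattern described here, many edits landing on a page within a short timeframe, can be checked mechanically. The following is a minimal sketch, assuming you already have a list of edit timestamps for a page. The function name `find_edit_bursts`, the 30-minute gap, and the five-edit threshold are illustrative assumptions, not anything Wikipedia itself is known to use.

```python
from datetime import datetime, timedelta


def find_edit_bursts(timestamps, max_gap=timedelta(minutes=30), min_edits=5):
    """Return (first_edit, last_edit, count) for each dense run of edits.

    Hypothetical helper: max_gap and min_edits are arbitrary illustration
    values, not Wikipedia policy.
    """
    bursts = []
    current = []
    for ts in sorted(timestamps):
        # A long pause since the previous edit closes the current run.
        if current and ts - current[-1] > max_gap:
            if len(current) >= min_edits:
                bursts.append((current[0], current[-1], len(current)))
            current = []
        current.append(ts)
    # Do not forget the final run.
    if len(current) >= min_edits:
        bursts.append((current[0], current[-1], len(current)))
    return bursts
```

A burst is only a reason to look closer. Breaking news and routine cleanup also produce dense runs of edits, so the sources behind each change still need to be read.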

With every discovered hoax and every new method of vandalizing Wikipedia, the platform and the public have found new ways to detect and correct false information.

How Wikipedia detects bogus edits

For a platform that charges no subscription fees (although that may not always be the case) and leans heavily on volunteer writers and editors, Wikipedia’s approval process and screening are quite impressive.

Wikipedia uses a bot called ClueBot NG to detect bogus edits, often within seconds of someone trying to change a page with faulty information. The bot is impressive at understanding context and whether the information would belong on a page with that type of content.

Wikipedia may also detect problem edits through stylometry (spotting multiple accounts that share the same editing or writing style), browser cookies, session IDs, and other browser- and IP address-specific indicators.

In most cases, Wikipedia doesn’t want a user to operate more than one account, because doing so may be a sign of something nefarious going on, such as sockpuppetry (discussed below).
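To make stylometry concrete, here is a minimal sketch that compares two pieces of writing by their character trigram profiles. It assumes nothing about how Wikipedia's volunteers or tools actually measure style. The function names `char_ngrams` and `style_similarity` and the choice of trigrams with cosine similarity are illustrative only.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Count overlapping character n-grams in lowercased, whitespace-normalized text."""
    cleaned = " ".join(text.lower().split())
    return Counter(cleaned[i:i + n] for i in range(len(cleaned) - n + 1))


def style_similarity(text_a, text_b, n=3):
    """Cosine similarity between two n-gram profiles, from 0.0 to 1.0."""
    a, b = char_ngrams(text_a, n), char_ngrams(text_b, n)
    # Only n-grams present in both texts contribute to the dot product.
    dot = sum(a[gram] * b[gram] for gram in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # One text is too short to have any n-grams.
    return dot / (norm_a * norm_b)
```

A high score says only that two texts look alike on the surface. Two unrelated people can write in a similar style, so a score like this is a hint at best and not proof of shared authorship.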

Fingerprinting

Fingerprinting is when a browser’s screen resolution, cookies, IP address, computer type, operating system type, and more are used together to identify a person even when their name is not known. Fingerprinting is one of the more intrusive ways marketers identify and worm their way into your psyche, and Wikipedia uses a form of it as well. Stylometry is a form of fingerprinting.
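As a rough illustration of the idea, the sketch below combines a handful of browser and device attributes into one short identifier. The attribute names and the function `browser_fingerprint` are hypothetical, and this is not a description of what Wikipedia or any particular tracking product collects.

```python
import hashlib
import json


def browser_fingerprint(attributes):
    """Hash a dict of browser/device attributes into a short, stable ID.

    Hypothetical helper for illustration; real fingerprinting systems use
    many more signals and fuzzier matching.
    """
    # Sort keys so the same attributes always serialize identically.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


visitor = {
    "screen": "1920x1080",        # assumed attribute names
    "os": "Linux",
    "browser": "Firefox",
    "timezone": "UTC-5",
}
print(browser_fingerprint(visitor))
```

No name appears anywhere in the input, yet the same combination of settings produces the same ID on every visit. Changing a single attribute, such as the screen resolution, produces a different ID, which is why the workarounds discussed next involve switching computers, browsers, and headers.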

Paid Wikipedia editors find workarounds

Sophisticated paid Wikipedia editors have found ways around fingerprinting, stylometry, and even the most suspicious editors. To do this, they turn to tools like residential IP addresses, which are addresses assigned to home internet connections rather than corporate blocks of proxy addresses. They will also use different computers or operating systems, various browsers, spoofed headers, and even GPT-4, which is a type of AI that writes or rewrites text.

As enforcement gets more aggressive in response, legitimate contributors get caught in the net: real editors are banned every day by overzealous Wikipedia editors.

For example, Reputation X was contacted a few months ago by a woman who noticed that Wikipedia was run by white men based mainly in the US and Germany. She decided to become a Wikipedia editor to balance the scales a little. Well, she was banned.

Here’s what happened. After a year of making edits, she was accused of being part of a sock puppet farm. The editor who banned her said it was because her editing style was similar to that of someone else who was actually a paid editor running a sock puppet operation. She contacted many Wikipedia editors to try to get the ban reversed, begging them to reinstate her account; her pleas fell on deaf ears.

What happened to the woman who called us? She eventually abandoned her attempts to become a female Wikipedia editor. She told us the Wikipedia environment was too toxic.

What are Wikipedia sock puppets?

Wikipedia considers the deceptive use of multiple accounts a form of sockpuppetry. This is when someone maintains multiple accounts to deceive or for malicious purposes. For example, if a representative of a company created a fake account to praise the company on Wikipedia (making it look like an ‘unbiased third party’ was bestowing the praise), that is a sockpuppet account.

To help identify sock puppets, a select group of Wikipedia editors can use a tool called CheckUser. CheckUser lets them see the IP address of a logged-in user, something that is normally hidden from most editors, and check whether the same IP address has been used by different accounts. The tool also allows them to view other technical data beyond that, a form of spying known as “fingerprinting.”
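The core check, whether one IP address has been used by several accounts, can be sketched in a few lines. This is not CheckUser's implementation. The log format, the function name `accounts_sharing_ips`, and the sample data are assumptions, and the addresses come from ranges reserved for documentation.

```python
from collections import defaultdict


def accounts_sharing_ips(log_entries):
    """Map each IP to the set of accounts seen on it, keeping only shared IPs.

    `log_entries` is an assumed format: an iterable of (username, ip) pairs.
    """
    accounts_by_ip = defaultdict(set)
    for username, ip in log_entries:
        accounts_by_ip[ip].add(username)
    # An IP used by a single account is unremarkable, so drop it.
    return {ip: users for ip, users in accounts_by_ip.items() if len(users) > 1}


sample_log = [
    ("Alice", "192.0.2.10"),
    ("Bob", "198.51.100.7"),
    ("Carol", "192.0.2.10"),
]
print(accounts_sharing_ips(sample_log))
```

A shared address is weak evidence on its own. Offices, schools, and mobile carriers routinely put many unrelated people behind one IP address.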

Wikipedia also forbids users from borrowing someone else’s account or reviving an old unused account to make a change.

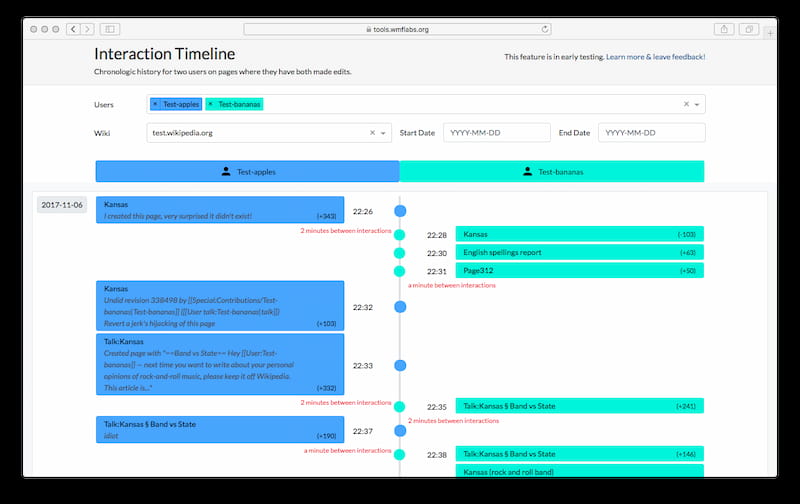

Wikipedia has a process for viewing the interactions between contributors to analyze their actions and detect issues. When several accounts repeatedly edit the same pages in concert, that kind of tag-teaming can be a sign of a sock puppet farm in action.
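One simple way to look at interactions between contributors is to list the pages that each pair of accounts has in common. The sketch below assumes a list of (username, page title) edit records. The function name `shared_pages` and the `min_shared` threshold are hypothetical and do not describe Wikipedia's own tooling.

```python
from collections import defaultdict
from itertools import combinations


def shared_pages(edits, min_shared=2):
    """Return {(user_a, user_b): pages both edited} for pairs with enough overlap.

    `edits` is an assumed format: an iterable of (username, page_title) pairs.
    """
    pages_by_user = defaultdict(set)
    for user, page in edits:
        pages_by_user[user].add(page)

    overlaps = {}
    # Sorting makes the pair order deterministic.
    for user_a, user_b in combinations(sorted(pages_by_user), 2):
        common = pages_by_user[user_a] & pages_by_user[user_b]
        if len(common) >= min_shared:
            overlaps[(user_a, user_b)] = common
    return overlaps
```

Editors with the same hobby or profession will naturally overlap, so a pair flagged this way is a starting point for a human review and not a verdict.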

For a fascinating read on the mechanics of sock puppet investigations, check out this page.

How to flag bogus Wikipedia information

Anyone can make changes to a Wikipedia article. However, generally speaking, you should not edit a page in which you have a vested interest. Instead of editing your company’s Wikipedia page to fix a mistake, for example, you can flag it as incorrect for a Wikipedia editor to review. During the review process, the flag also informs visitors that the information might not be reliable.

Reputation X has had more than one client ask us for advice after trying the honest approach to Wikipedia editing. To follow the rules, an editor with a conflict of interest must disclose it. Knowing that, many company representatives who want to correct wrong information on their company’s Wikipedia page, and who want to follow the rules, will create an account with the name of their company as the username. Guess what: their edits get reversed, their accounts get banned, or both. Sadly, this form of honesty is not well received in the world of Wikipedia editors.

Wikipedia views constructive criticism as an important part of the platform. It helps make the content better. So whether you are a novice or a Wikipedia expert, you should feel free to update or flag bogus information; just be aware of the risks. If you have a conflict of interest before making an edit, well… use your best judgment.

Wikipedia vandalism FAQs

Do you need an account to edit Wikipedia?

Wikipedia does allow for anonymous edits, but unregistered users will be prohibited from making changes to pages that regularly see vandalism. Pages that are vandalized regularly will be protected, and only trustworthy Wikipedia accounts will be able to make edits to these pages.

Can Wikipedia edits be traced?

When a user makes a Wikipedia edit, their username and IP address are logged in the system. A user can make anonymous entries, but it’s not possible to entirely hide the user’s identity from Wikipedia.

How can I see who edited my Wikipedia page?

You can click on the history tab of an article on Wikipedia to learn who has edited the page. However, it displays only usernames for editors who were logged in and IP addresses for unregistered users, so it’s not an ideal way of identifying individual people or learning why they edited a page.
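The same history can be pulled programmatically from the public MediaWiki Action API, which is handy if you want to monitor a page on a schedule. The following is a minimal sketch, assuming the English Wikipedia endpoint and the standard `action=query` and `prop=revisions` parameters. The helper names and the User-Agent string are placeholders to replace with your own.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def build_history_url(title, limit=20):
    """Build a revisions query for one page title."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "user|timestamp|comment",
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,
    }
    return API_URL + "?" + urlencode(params)


def parse_history(payload):
    """Extract (timestamp, user, comment) tuples from a decoded API response."""
    rows = []
    for page in payload.get("query", {}).get("pages", []):
        for rev in page.get("revisions", []):
            rows.append((rev.get("timestamp"), rev.get("user"), rev.get("comment", "")))
    return rows


def fetch_history(title, limit=20):
    """Fetch recent revisions over the network (placeholder User-Agent)."""
    request = Request(
        build_history_url(title, limit),
        headers={"User-Agent": "history-check-example/0.1"},
    )
    with urlopen(request, timeout=10) as response:
        return parse_history(json.load(response))
```

Calling `fetch_history("Your page title")` returns the most recent edits, newest first. The output has the same limits as the history tab: it shows account names or addresses, not real identities.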

About the author

Kent Campbell is the chief strategist for Reputation X, an award-winning online reputation management agency. He has over 15 years of experience with SEO, Wikipedia editing, review management, and online reputation strategy. Kent has helped celebrities, leaders, executives, and marketing professionals improve the way they are seen online. Kent writes about reputation, SEO, Wikipedia, and PR-related topics.
