The Big Fat Guide to Mastering Wikipedia’s Editing Rules

Imagine this: you’ve spent hours crafting an edit on Wikipedia—carefully choosing your words, sourcing information from what you believe to be credible references, and hitting that “save” button with a small sense of triumph. Then, barely 24 hours later, your work is gone, erased with a curt explanation buried in talk page jargon. Frustrating? Absolutely. You’re left wondering what invisible rule you unknowingly broke and how people manage to navigate Wikipedia’s labyrinth of policies without pulling their hair out.

Here’s the thing—editing Wikipedia isn’t just about good intentions or even good research. It’s about mastering a very specific, sometimes finicky, set of guidelines that can feel like trying to read a map written in code. For marketers, PR professionals, or anyone tasked with managing how a brand or individual is represented online, understanding these rules isn’t just helpful—it’s essential. Wikipedia’s reputation as a first-stop information source makes it a powerful ally when done right, but it’s also an unforgiving space for those who misstep.

So how do you bridge the gap between your goals and Wikipedia’s strict standards? That’s exactly what we’re diving into. We’re breaking down the rules, translating the jargon, and giving you practical tips to confidently navigate the platform—without losing your edits, your patience, or your professional credibility in the process. Let’s get into it.

Table of Contents

- Key Takeaways

- Understanding Wikipedia’s Content Policies

- Mastering Wikipedia Editing: What is Original Research

- Mastering Wikipedia’s Editing Rules: Verifiability Simplified

- Mastering Wikipedia Edits: Staying Neutral and Compliant

- Mastering Wikipedia’s Editing Rules: COI Explained

- Additional Best Practices for Editing Within Wikipedia’s Rules

- Tools and Resources for Mastering Wikipedia Editing Rules

Key Takeaways

- Wikipedia isn’t a free-for-all; it’s a carefully curated symphony of rules. Think of editing on Wikipedia like playing a musical instrument. You can’t just jam out your own tune; you’ve got to follow the sheet music, or in this case, the policies. Wikipedia’s holy trinity of content policies (Neutrality, Verifiability, and No Original Research) is the foundation of everything.

- Original thoughts are great…except on Wikipedia. One of the platform’s quirks is its allergy to originality when it comes to research. Adding a groundbreaking idea or unpublished analysis? That’s a no-go. This article will show you how to spot—and steer clear of—accidental “original research” pitfalls in your edits.

- Not every source is created equal. Using your favorite blog post or press release as a reference might seem harmless, but Wikipedia’s standards for reliable sources are strict. You’ll learn how to identify the gold-standard references that meet its verifiability requirement and avoid the edits that get axed for sourcing problems.

- Neutrality isn’t optional—it’s the law of the land. Wikipedia has a neutrality radar that’s always on high alert. The guide delves into strategies for writing compliant, unbiased entries while spotting edits that could raise red flags. Staying neutral protects both your contributions and your credibility.

- Conflicts of interest (COI) are like land mines—handle with care. Whether you’re editing for your employer, a client, or even yourself, COI guidelines can feel like a tightrope walk. This guide offers practical advice on how to navigate COI situations ethically and collaboratively with Wikipedia’s editing community.

- Talk pages are where the magic—and sometimes the mess—happens. These behind-the-scenes discussion boards are often overlooked by newcomers, but they’re key to understanding and influencing the communal decisions behind Wikipedia edits. We’ll show you how to effectively engage in these spaces without getting lost or misunderstood.

- Think of Wikipedia as a team sport, not an individual race. Decisions are often reached through consensus, not solo efforts. Gaining a clearer understanding of Wikipedia’s quirky consensus model will help you work with, rather than against, other contributors.

- Boost your game with the right tools and resources. From monitoring article changes to seeking guidance from experienced editors, there’s an entire toolkit you can leverage. This guide rounds up the best options to help you stay informed and engaged as you refine your Wikipedia editing expertise.

Mastering Wikipedia’s rules doesn’t just protect your edits—it gives you the confidence to create content that sticks. With a solid grasp of the platform’s guidelines, you’ll not only minimize frustration but also contribute meaningfully to one of the internet’s most trusted spaces. Ready to dive in? Let’s get started!

Understanding Wikipedia’s Content Policies

Wikipedia operates on a foundation of three core content policies that function as its guiding principles: neutrality, verifiability, and the prohibition of original research.

These policies are non-negotiable and interdependent, meaning they collectively ensure the encyclopedia remains reliable, well-sourced, and free of bias. If you’ve ever wondered why Wikipedia doesn’t read like a Reddit debate or a personal blog, these policies are the reason. They aren’t just rules—they’re the backbone of how Wikipedia maintains its credibility as a source of information.

When you dig into these policies, it becomes clear that they’re not just there for show; they have practical, real-world applications that you’ll encounter constantly if you’re editing on the platform. Let’s take neutrality, for example. At its core, the neutrality policy is about balance—it mandates that articles don’t promote one perspective over another but present all significant viewpoints fairly. This doesn’t mean giving equal weight to every opinion, though. A fringe theory with no basis in reliable research doesn’t get the same treatment as a well-regarded scientific consensus. This distinction is key, and one that Wikipedia editors consistently enforce to avoid turning articles into battlegrounds for advocacy.

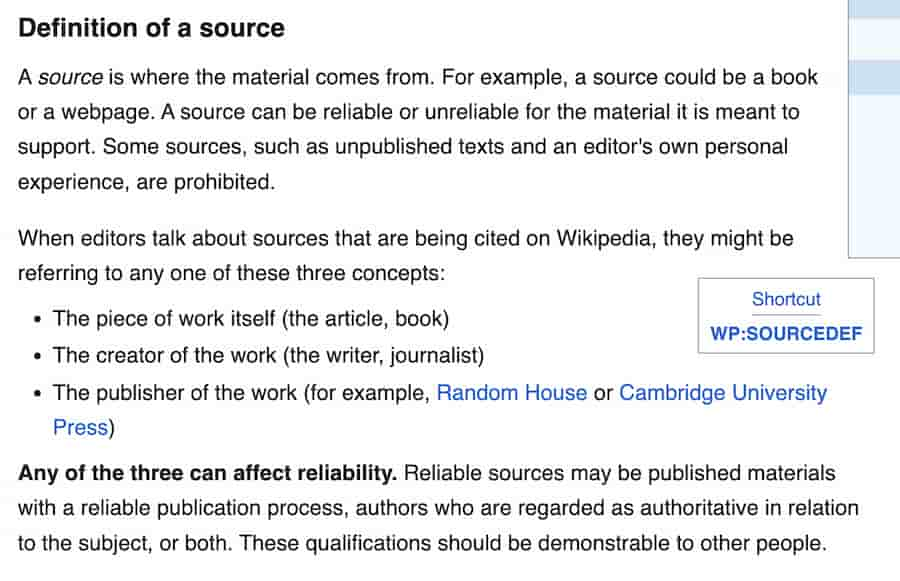

Verifiability is another cornerstone. It’s not enough to believe something is true or even personally know it to be true—on Wikipedia, every claim needs to be backed by a reliable source. This is where citations come into play, and why you’ll often see those little superscript numbers in articles leading to books, journals, or credible websites. Verifiability saves Wikipedia from being a platform for unchecked assertions and forces contributors to root their edits in evidence. It’s a check against misinformation but also a reminder that no edit exists in isolation; everything must be traceable back to a vetted source.

Finally, there’s the policy against original research. Wikipedia isn’t the place to break news, publish a personal analysis, or introduce an undiscovered concept. This policy often trips up well-meaning editors who may have unique insights they’re eager to share, but Wikipedia draws a firm line: it’s a summary of existing, published knowledge, not a forum for creating something new. It’s like joining a book club but sticking to discussing the book rather than critiquing how the author could’ve written it differently.

What’s fascinating is how intertwined these policies are. One naturally supports and reinforces the others. For instance, verifiability complements neutrality; without reliable sources, there’s no way to ensure an article adequately reflects opposing viewpoints. Similarly, banning original research keeps the platform focused on documenting established information rather than veering into speculative or biased territory. As you start editing, you’ll see how these three principles operate in harmony to create a cohesive editing environment.

Understanding these core policies isn’t just about following rules—it’s about recognizing the values that make Wikipedia a unique space for global knowledge-sharing. Once you grasp these essentials, it’s easier to navigate the platform’s expectations and contribute edits that hold up to community scrutiny.

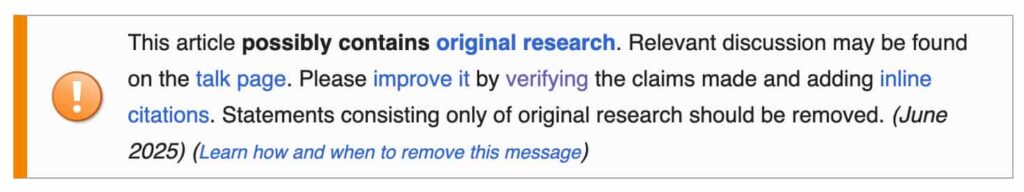

Mastering Wikipedia Editing: What is Original Research

If you’ve ever dabbled in Wikipedia editing, you’ve probably heard the term “original research” thrown around, often with an air of caution. It’s a cornerstone of the platform’s content policies, yet it can feel frustratingly abstract when you’re diving into guidelines for the first time. So, what does Wikipedia mean by “original research”? At its core, it’s all about protecting the neutrality and credibility of the encyclopedia by ensuring that everything on Wikipedia is backed by verifiable, published sources—not the personal insights or interpretations of its contributors. Let’s look closer at why this matters, how it’s defined, and, most importantly, how to steer clear of crossing this boundary in your edits.

Definition of Original Research

Wikipedia defines original research, or OR, as material that has not been published in a reliable source.

Essentially, this encompasses content that hasn’t undergone some form of external scrutiny or review before appearing on Wikipedia. Original research isn’t just about publishing a groundbreaking study on an obscure topic; it can be as subtle as introducing your interpretation of existing data or drawing conclusions that are not explicitly stated in the sources you’re using. The platform is built on the principle of summarizing what’s already out there in a way that remains neutral and factual, so new, unsourced ideas—no matter how well-intentioned or insightful—can’t find a home on Wikipedia.

This is where the challenges for enthusiastic editors tend to pop up. It’s easy, for example, to view yourself as adding value by synthesizing information from different sources or providing detailed commentary that no one else has thought to write. But if your wording or emphasis strays beyond what the sources explicitly say, Wikipedia considers that your own analysis—and that’s where you tip into original research.

Examples of What Constitutes Original Research

Sometimes the concept of original research feels abstract until you see it in action. Let’s consider a scenario: you’re working on improving the Wikipedia page for a scientific discovery. You find multiple sources discussing the breakthrough, but none of them explicitly connect the implications to a current political debate. Drawing that connection on your own, even if it might seem logical or helpful, would constitute original research because the conclusion didn’t come from the published sources you’re citing. Similarly, suppose you’re editing a historical figure’s page and use primary sources, like personal letters or manuscripts, to craft new narrative insights. Unless a secondary source has already analyzed and interpreted those materials in the way you’re suggesting, any analysis you add becomes original research.

Another common example is when editors attempt to combine statistics in creative ways. For instance, taking population growth data from two sources and calculating an additional trend line to project future numbers might feel like a helpful addition—but unless your secondary sources make that same calculation, your synthesized result is inherently original research. Even the way you group or juxtapose information can inadvertently step into this territory if the connections or interpretations are uniquely yours rather than something clearly verified in a reliable source.

How to Avoid Original Research in Your Edits

Thankfully, avoiding original research doesn’t require a complete overhaul of your editing instincts—it just demands a strong commitment to rigorous sourcing and a focus on staying true to your materials. The first rule of thumb is to let your sources do the “talking” for you. This means summarizing their content without embellishing, speculating, or adding context beyond what is plainly in the text. If you find yourself writing something that feels like an interpretation, pause and ask, “Does this conclusion appear explicitly in the source I’m citing?” If the answer is no, it’s worth revisiting your edit.

Another useful habit is working from secondary sources rather than primary ones, especially if you’re new to Wikipedia editing. While primary sources, such as historical documents, direct interview transcripts, or raw datasets, hold immense value, Wikipedia relies on secondary sources to interpret them. That’s because secondary sources have typically been vetted by experts or peers and provide the kind of contextual analysis that Wikipedia editors are not supposed to supply themselves. For example, citing a scholarly article that discusses the impact of a historical treaty is far safer than drawing your own conclusions from the treaty’s text.

Think about transparency as well. Always ensure that your edit clearly reflects the source you’re using without paraphrasing in a way that adds unintended meaning. For instance, don’t “clean up” overly academic language into something more dramatic or opinionated for the sake of readability; doing so risks introducing bias or distortion.

Lastly, lean into Wikipedia’s collaborative structure. The talk pages on each article are invaluable when working on tricky subjects that walk the fine line between summary and original research. Discussing your approach with other contributors helps build consensus and ensures your edits align with Wikipedia’s rules. It’s also a good way to learn from more experienced editors who can flag potential pitfalls before your content is reverted.

By staying anchored in verifiable sources and resisting the urge to infuse your own knowledge or perspective, you’re not just avoiding original research—you’re actively contributing to the trustworthiness and reliability of Wikipedia as a resource for millions worldwide.

Mastering Wikipedia’s Editing Rules: Verifiability Simplified

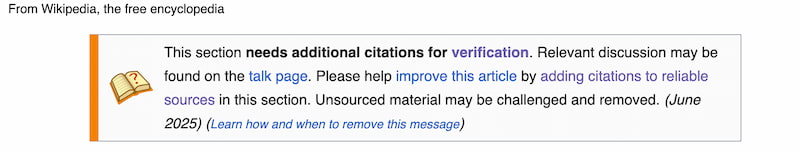

If you’ve ever taken a peek behind the scenes at Wikipedia’s vast collection of articles, one key principle keeps it all afloat: verifiability. It’s not just a rule buried deep in Wikipedia’s policy pages—it’s the backbone of what makes Wikipedia a reliable resource (when used thoughtfully, of course). But what does “verifiability” actually mean in practical terms? Let’s break it down.

Think of verifiability as Wikipedia’s commitment to ensuring every fact and claim on the platform can be checked and traced back to a credible, published source. It’s not about whether something is objectively true—because defining “truth” can get tricky in an encyclopedia built collaboratively. Instead, the question boils down to whether a reasonable editor could verify the information by consulting a reputable source. When you look at it this way, verifiability becomes less intimidating and more like a useful compass, guiding what gets included on Wikipedia and what doesn’t.

The Concept of Verifiability in Wikipedia

Wikipedia defines verifiability simply: information must be backed by sources that readers can consult to confirm accuracy.

The mantra here is, “verifiability, not truth.” This doesn’t mean Wikipedia doesn’t care about accuracy; it does. But the goal is to keep the playing field even by requiring every assertion, no matter how seemingly obvious, to be traceable to a clear and accessible foundation. For instance, even if everyone agrees that the Eiffel Tower is one of the most famous landmarks in the world, the statement must still be attributable to a reliable source, and it needs an inline citation if another editor challenges it or is likely to.

This standard creates a chain of accountability. It ensures editors aren’t slipping in their own opinions or unverifiable claims, and it makes edits easier for other contributors to review. The platform relies on this shared assurance: nothing enters Wikipedia without the ability to check that it came from somewhere credible.

Sources Considered Reliable for Verifiability

Not every source that looks professional on the surface is automatically suitable for Wikipedia. Verifiability hinges heavily on the idea of “reliable sources.” These are typically secondary sources—think of well-established newspapers, academic journals, peer-reviewed studies, or books put out by reputable publishers. Independent reporting and expert analysis are what Wikipedia holds as gold-standard citations. For example, citing an article from The New York Times or Nature carries more weight than using a quick blog post from an unknown website.

Here’s a tip: Wikipedia editors treat self-published sources, like personal blogs or websites that people curate themselves, with extreme caution. Such sources are generally avoided unless the person behind them is a recognized expert writing within their specific field. For instance, while a renowned scientist’s blog on quantum mechanics might work in certain cases, a random Reddit thread discussing the same topic definitely won’t.

It’s also worth noting that primary sources, like government reports, court documents, or firsthand accounts, can be used, but only sparingly and with care. Wikipedia favors secondary sources because they tend to provide more context, analysis, and perspective, which aligns with its role as a tertiary resource summarizing existing knowledge.

Common Mistakes to Avoid When Ensuring Verifiability

Verifiability, while foundational, isn’t free from pitfalls. One common error is assuming that just because something is widely known or shared across social media, it must be verifiable. Wikipedia doesn’t operate on the “everyone knows this” principle. Editors need to dig deeper and find sources that meet the platform’s reliability standards—if a claim is worth including, a credible source should be able to back it up.

Another frequent misstep involves using cherry-picked sources that push a particular narrative. For example, citing an advocacy organization as the sole support for a controversial claim might not work if the source lacks impartiality. A biased source is not automatically unusable, but its claims usually need clear attribution and should be backed by independent, well-regarded sources wherever possible.

Lastly, pay close attention to accessibility when choosing citations. Sources that are behind paywalls or extremely niche (like obscure academic publications) are harder for other editors and readers to check. Wikipedia does not reject a reliable source just because it is costly or difficult to access, but when two sources are equally reliable, the one that is free to access or broadly available is the more practical choice. After all, Wikipedia thrives on transparency, not just for editors, but for its readers.

By approaching verifiability as a guiding principle, rather than a roadblock, you’re better equipped to navigate Wikipedia’s editing rules. It’s less about jumping through hoops and more about building trust—trust among editors and trust with the millions of people who rely on Wikipedia every day.

Mastering Wikipedia Edits: Staying Neutral and Compliant

At its core, Wikipedia thrives on one of its most important guiding principles: neutrality. This concept, encapsulated in the Neutral Point of View (NPOV) policy, is what ensures Wikipedia doesn’t just become a collection of opinions or promotional material. Instead, it remains an ever-evolving repository of verified information that serves all readers, not just a specific audience or interest group. But while the idea of neutrality might seem straightforward on the surface, actively maintaining it in your edits can feel like walking a tightrope, especially when you’re dealing with contentious topics or areas where you might unknowingly carry your own biases.

Understanding Wikipedia’s Neutral Point of View (NPOV) Policy

The NPOV policy isn’t just a suggestion—it’s a non-negotiable rule that applies to every single entry, whether the topic in question is historical events, scientific theories, or a local business’s profile. Simply put, Wikipedia expects content to be written in a way that does not promote any viewpoint, ideology, or agenda. It’s about presenting facts fairly and proportionally, based on reliable sources, while steering clear of language that carries implicit judgment or favoritism.

Let’s break this down: imagine you’re editing a page about climate change. Because the scientific consensus overwhelmingly supports certain conclusions, neutrality doesn’t mean giving extra space to fringe theories for the sake of balance. However, it does require that alternative viewpoints, if covered by credible sources, are mentioned appropriately, without dismissive language and without more weight than the sources give them. Neutrality is not about “equal airtime”; it’s about fair representation based on the strength of the evidence.

Tips to Maintain Neutrality in Your Edits

You might wonder how to ensure your edits align with the NPOV standard while still contributing meaningful content. A good place to start is by continuously grounding yourself in Wikipedia’s commitment to verifiable accuracy, rather than personal beliefs or outside motivations. Here are some actionable strategies to keep your edits compliant with the neutrality policy:

- Stick to reliable sources: If you’re relying on sensational headlines, opinion pieces, or sources with clear biases, your edit is likely to become problematic. Always check that your references align with Wikipedia’s criteria for reliability—this means peer-reviewed journals, reputable news outlets, or expert consensus, depending on the subject.

- Use dispassionate language: Words have weight. Terms that carry emotional or judgmental tones (like “obviously,” “revolutionary,” or even “controversial” when it’s not explained) can wreck the neutrality of an entry. Replacing such language with matter-of-fact descriptions grounded in your sources helps maintain objectivity.

- Balance perspectives carefully: One of the trickiest parts of editing involves determining how much weight a particular viewpoint should receive. Wikipedia uses a principle called “due weight,” which asks editors to reflect the prominence of an idea based on available and reliable sources. For instance, if 95% of sources support a certain perspective and only 5% argue against it, it would be misleading to present these views as though they’re equally supported or significant.

- Be self-critical about bias: Even when we don’t intend to, our prejudices—whether political, cultural, or personal—can creep into our writing. Consider revisiting your edits after a short break with fresh eyes, or using Wikipedia’s Talk Pages to invite feedback from fellow editors who can point out areas where you might unintentionally tip the scale.
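The 95%/5% example in the due weight tip can be made concrete with a little arithmetic. The sketch below is purely illustrative: Wikipedia has no numeric formula for due weight, and the function name `proportional_space`, the source counts, and the 400-word budget are all hypothetical. It only shows why a 95/5 split among reliable sources should not turn into a 50/50 split in the article.

```python
def proportional_space(source_counts: dict[str, int], total_words: int) -> dict[str, int]:
    """Split a word budget across viewpoints in proportion to source counts.

    Hypothetical helper for illustration only; editors judge due weight
    by the quality and prominence of sources, not by a formula.
    """
    total_sources = sum(source_counts.values())
    if total_sources == 0:
        raise ValueError("need at least one source")
    return {
        viewpoint: round(total_words * count / total_sources)
        for viewpoint, count in source_counts.items()
    }


# Assumed numbers: 95 reliable sources support the mainstream view, 5 dissent.
counts = {"mainstream": 95, "minority": 5}
allocation = proportional_space(counts, total_words=400)
print(allocation)  # {'mainstream': 380, 'minority': 20}
```

In a 400-word section, the minority view would get roughly a sentence or two rather than half the space. Treat the numbers as a sanity check on proportion, not as a rule to cite in a dispute.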

Consequences of Violating the Neutrality Rule

Failing to adhere to neutrality can lead to far-reaching repercussions, not just for an individual editor but for the integrity of Wikipedia as a whole. Blatantly promotional language, for example, is a red flag for other editors and administrators, often resulting in your edits being swiftly reverted. If the violation is severe or recurrent—such as persistent bias in politically sensitive articles—it could even lead to temporary or permanent editing restrictions.

Moreover, neutrality violations can harm Wikipedia’s reputation as a trusted information source. For example, if a company’s article reads too much like marketing copy filled with glowing praise, readers might question whether it’s a robust, unbiased encyclopedia or a platform susceptible to outside manipulation. This erosion of trust impacts everyone—from casual readers to the dedicated editor community.

Ultimately, mastering neutrality isn’t just about meeting a checkbox requirement—it’s about contributing to Wikipedia’s credibility and ensuring your work becomes a lasting part of a global knowledge system.

Mastering Wikipedia’s Editing Rules: COI Explained

Let’s start with the basics: COI, or Conflict of Interest, is a term you’ll see often if you’re looking to make any sort of contribution to Wikipedia. It might sound like a legal concept or something reserved for corporate boardrooms, but on Wikipedia, COI is all about preserving the integrity of the platform by discouraging editors from making changes that could be biased by personal, financial, or professional loyalties.

At its core, Wikipedia operates on trust. The platform relies on its contributors to build, edit, and refine articles in a way that’s factual, neutral, and community-driven. When someone with a stake in the topic—whether it’s a company employee editing the company’s Wikipedia page, a publicist adding accolades to a celebrity’s profile, or even just a diehard fan describing their favorite video game—makes an edit, that trust can slip. The concern isn’t that these editors have bad intentions; instead, it’s that their involvement creates the perception of bias, which Wikipedia tries very hard to avoid.

What Is a Conflict of Interest (COI) on Wikipedia?

So, what counts as a genuine conflict of interest? According to Wikipedia’s guidelines, it’s not just about obvious ties, like working for a company or being related to someone mentioned in an article. It can also apply to situations where you have an indirect but significant interest in how the topic is presented. For instance, running a blog or managing social media for a niche fan community might make you feel compelled to fine-tune an article in a way that aligns with your group’s views—the kind of edits that could subtly skew the information, even unintentionally.

This doesn’t mean you’re barred from editing pages related to topics you care about. What it does mean, however, is that you need to tread carefully. Recognizing where your personal involvement might introduce bias is the first step to mastering COI rules.

Recognizing and Declaring COIs in Your Edits

Transparency is the golden rule when it comes to handling a potential COI on Wikipedia. If you feel your connection to the subject might cloud your neutrality—or if others might perceive it that way—it’s important to disclose the relationship. Think of it as leveling with the community about your position upfront, so they can assess your contributions with full context.

Let’s say you’re tasked with updating your employer’s Wikipedia page. The most ethical approach isn’t to dive straight into the “edit” button but rather to use the article’s Talk page to propose changes. By doing so, you’re allowing impartial editors to review and implement the most relevant and accurate information. You might say something like, “Hi, I work for XYZ Company, and I noticed the current article is missing some updated stats from this independent report [link provided].” Declaring your role is a way to build credibility, demonstrating to the community that you’re following the rules while still engaging as a participant.

Even if you’re not directly editing but sharing content with someone else who contributes on your behalf, you’re still responsible for disclosing that connection. Wikipedia editors are an attentive bunch, and failing to be upfront about a COI can backfire, leaving your edits—or worse, your entire account—under scrutiny for potential bias.

Strategies to Manage COI Situations

So here’s the challenge: how do you contribute meaningfully and ethically when you have a COI? One strategy is to approach edits not as a direct participant but as someone who suggests improvements. As mentioned earlier, the Talk page is your best friend. If you can clearly outline the changes needed, back them up with reliable, third-party sources, and explain why they’d improve the content, other editors are likely to step in to implement them—if your case is solid.

Another approach is reframing how you view Wikipedia contributions altogether. Instead of trying to reflect positively on a subject you’re connected to, focus on improving the article according to its gaps. Are there facts missing? Could an existing claim use better citations? These kinds of contributions not only lower the perceived conflict but also align your efforts with Wikipedia’s commitment to accurate, verifiable content instead of advocacy.

A good example is how university faculty have worked with their students on adding citations and expanding stubs for topics where they have expertise, often steering entirely clear of pages where their work or affiliations are directly involved. By keeping their focus on general improvements and avoiding high-stakes articles, they keep COI concerns in check while still making valuable contributions to the platform.

One final note here: patience is key. Edits proposed or made with a COI may face extra scrutiny, and that’s okay. Trust the process, collaborate with the editing community, and remember that the end goal is an article that is complete, credible, and reflective of Wikipedia’s principles—not the most convenient version for you or anyone else.

Additional Best Practices for Editing Within Wikipedia’s Rules

Editing Wikipedia might feel like walking a tightrope at times—balancing factual accuracy, neutrality, and adherence to the platform’s intricate policies. Beyond understanding the core principles like verifiability, neutrality, and avoiding original research, there are practical techniques that can help you edit effectively while respecting Wikipedia’s guidelines. Let’s dive into a few key practices that can shape your contributions into something both impactful and rule-compliant.

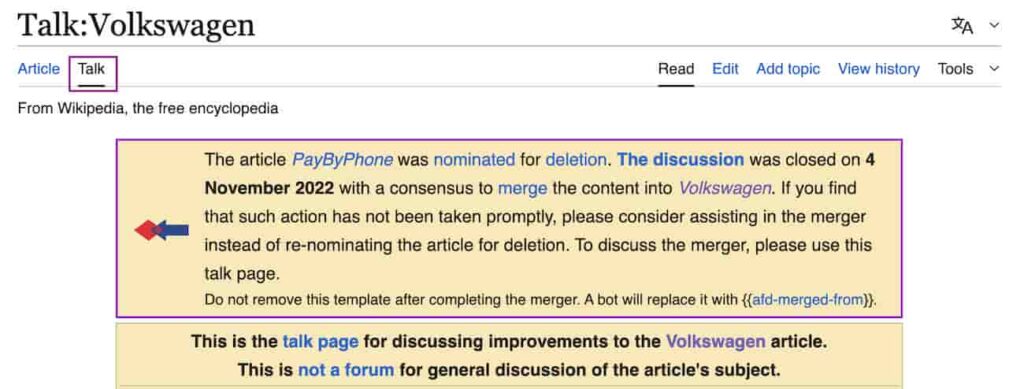

How to Navigate Wikipedia’s Talk Pages

If Wikipedia articles are a space where information is presented, the Talk Pages are the virtual workshop where contributors collaborate, debate, and fine-tune that information. These discussion pages, found by clicking the “Talk” tab at the top of any article, serve as a platform for editors to hash out disagreements, propose changes, and explain their reasoning.

When exploring Talk Pages, it’s crucial to approach them with a spirit of collaboration. For instance, if you have a suggested edit that might be contentious—like revising how a controversial topic is framed or adding data from a newer, lesser-known source—it’s wise to broach the subject on the Talk Page first. State your reasoning clearly, referencing Wikipedia’s policies where possible, such as citing WP:NPOV (Neutral Point of View) or WP:RS (Reliable Sources). Engaging this way not only builds consensus but also highlights your willingness to follow protocol.

Remember, every comment should be respectful and constructive. Heated debates happen, but successful editors rarely escalate them. Sign your contributions with four tildes (~~~~), which the software converts into your username and a timestamp, reinforcing transparency in your communication. And even if your proposal isn’t accepted immediately, treat the feedback as an opportunity to improve your understanding of Wikipedia’s norms.
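To make the mechanics concrete, here is a minimal sketch of what a signed Talk Page post looks like in wikitext. The helper name `format_talk_post` and the sample message are hypothetical; the only Wikipedia-specific pieces are the `== Heading ==` section syntax and the four-tilde signature, which the wiki software expands when the page is saved.

```python
def format_talk_post(heading: str, message: str) -> str:
    """Return wikitext for a new Talk Page section ending with a signature.

    Hypothetical helper: it only builds a string to paste into the editor.
    """
    heading = heading.strip()
    message = message.strip()
    if not heading or not message:
        raise ValueError("heading and message must both be non-empty")
    # "~~~~" is expanded by the wiki software on save, not by this code.
    return f"== {heading} ==\n{message} ~~~~\n"


post = format_talk_post(
    "Proposed wording change in the lead",
    "The second sentence reads as promotional. I suggest a more neutral "
    "phrasing, per WP:NPOV, supported by an independent source.",
)
print(post)
```

The output is plain text you could paste into the Talk Page editor. Keeping the signature at the end of the message is what lets other editors see who proposed the change and when.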

Understanding Wikipedia’s Consensus Model

Wikipedia doesn’t operate on a simple majority-rules system; instead, it relies on consensus. This concept means that substantive changes to articles require general agreement among editors, even if that agreement isn’t unanimous. Consensus isn’t just about vote counts on Talk Pages; it’s about reasoning and adherence to Wikipedia’s principles.

Let’s say you want to update an article about a recent scientific breakthrough. Even if two or three editors oppose the edit, your argument might still prevail if it’s well-supported by reliable sources and aligns with Wikipedia’s guidelines. Conversely, even ten editors agreeing isn’t enough if the proposed content doesn’t meet standards like verifiability or neutrality.

A great way to foster consensus is by proactively engaging with other editors on Talk Pages, explaining your edits thoroughly in Edit Summaries, and being open to compromise. For example, if someone challenges the addition of a statistic because your source isn’t considered reliable under WP:RS, consider proposing an alternative, more reputable source. Consensus is an ongoing process of give-and-take that rewards patience and diplomacy.

The Role of Edit Summaries and Revision Histories in Accountability

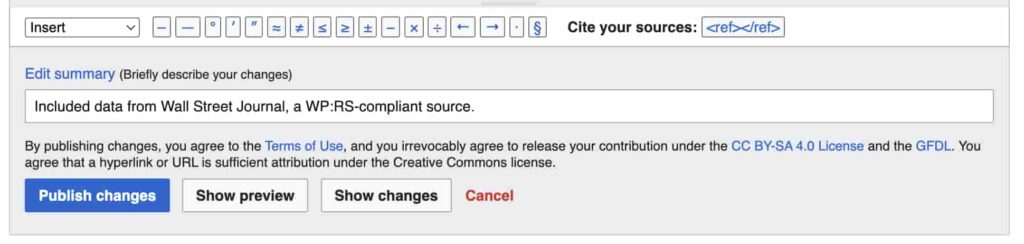

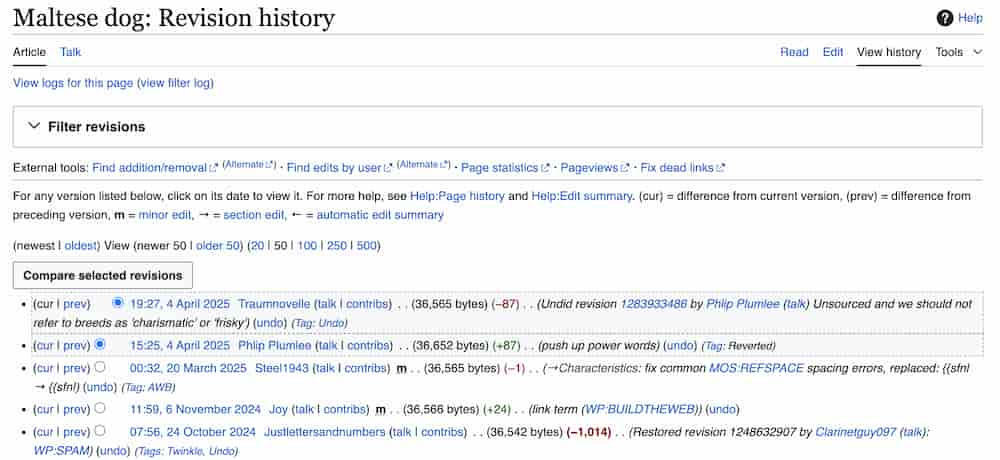

Every time you hit “Publish changes” on Wikipedia, you’ll notice a small box prompting you for an Edit Summary. Don’t skip this step—it’s more important than it might seem. A concise, clear summary of your edit not only keeps you accountable but also helps other editors quickly understand what changes have been made and why.

Think of your Edit Summary as a digital courtesy note to the rest of the Wikipedia community. For example, if you’re correcting a typo, a quick “Fixed typo” suffices. But for more substantive changes, go beyond the basics. If you’ve rephrased a section to improve neutrality, point it out: “Adjusted language for NPOV compliance on [topic].” If you’ve added new information, add a relevant note: “Included data from [Source Name], a WP:RS-compliant source.” This transparency builds trust and minimizes confusion or disputes later.

Coupled with Edit Summaries, Wikipedia’s revision history also ensures accountability. Any article’s “View history” tab allows you, and anyone else, to see a complete log of edits, including yours. This transparency underscores why edits must be thoughtful and compliant: every change, even a revert, is preserved for public scrutiny.
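The same log shown under “View history” can also be read programmatically through the MediaWiki Action API. The sketch below is a minimal illustration, not an official client: it builds a query URL using the API’s `action=query` and `prop=revisions` parameters, then formats a response into one line per edit. The helper names (`build_history_url`, `summarize_revisions`) and the sample response, including its usernames and summaries, are invented for illustration, and no network request is made.

```python
import json
from urllib.parse import urlencode

# English Wikipedia's Action API endpoint.
API_ENDPOINT = "https://en.wikipedia.org/w/api.php"


def build_history_url(title, limit=5):
    """Build a URL asking for the most recent revisions of one article."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user|comment",  # comment = the edit summary
        "rvlimit": limit,
        "format": "json",
        "formatversion": 2,
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def summarize_revisions(response_text):
    """Turn an API response into one readable line per revision."""
    data = json.loads(response_text)
    rows = []
    for page in data["query"]["pages"]:
        for rev in page.get("revisions", []):
            summary = rev.get("comment") or "(no edit summary)"
            rows.append(f'{rev["timestamp"]}  {rev["user"]}: {summary}')
    return rows


# Hypothetical response, shaped like formatversion=2 output. The users and
# summaries are made up for this example.
SAMPLE_RESPONSE = json.dumps({
    "query": {
        "pages": [{
            "title": "Example",
            "revisions": [
                {"user": "ExampleEditorA", "timestamp": "2024-05-01T10:00:00Z",
                 "comment": "Adjusted language for NPOV compliance"},
                {"user": "ExampleEditorB", "timestamp": "2024-04-30T09:30:00Z",
                 "comment": ""},
            ],
        }]
    }
})

history_url = build_history_url("Example")
history_rows = summarize_revisions(SAMPLE_RESPONSE)
```

An edit without a summary shows up as “(no edit summary)” in the output, which is a fair picture of how an unexplained change looks to everyone else reading the history.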

Combining Best Practices for Collaborative Success

When you piece all these practices together—constructive Talk Page usage, fostering consensus, and leaving thoughtful Edit Summaries—you’re not just playing by Wikipedia’s rules; you’re actively contributing to a culture of responsible, community-driven editing. Collaboration is the foundation of Wikipedia, and by aligning your behavior with its norms, you’ll likely find your contributions are not only accepted but respected.

Tools and Resources for Mastering Wikipedia Editing Rules

Navigating Wikipedia’s web of editing policies can feel like learning a new language—it’s intricate, nuanced, and requires a bit of practice to get right. But just like any skill, having the right tools and resources at your disposal makes all the difference. Whether you’re a newcomer trying to make your first contribution or a seasoned editor refining your technique, Wikipedia offers a surprising array of resources to guide you. Let’s take a closer look at some tools and communities that will empower you to align your edits with Wikipedia’s policies and maintain credibility in its collaborative environment.

Recommended Guidelines and Reference Materials

If you’ve ever felt a little overwhelmed by Wikipedia’s dense policy pages, you’re not alone—many editors admit it can feel like drinking from a fire hose. The good news is, Wikipedia provides streamlined versions of its policies that serve as excellent starting points. The “Editing Wikipedia” brochure, for instance, is specifically tailored for newcomers. It covers the essentials: neutrality, verifiability, and reliable sourcing, breaking these big ideas into digestible sections.

Another indispensable guide is the Wikipedia:Policies and guidelines overview. While it’s more comprehensive, it’s organized in a way that allows you to zero in on specific topics, such as how to handle conflicting viewpoints or assess the reliability of a source. When in doubt, the “Cheatsheet” for Wikipedia editing provides a quick reference to wiki markup, so you’re not fumbling through your first few changes.

For more practical examples, the Wikipedia Tutorial offers a step-by-step walkthrough tailored to common scenarios, like managing citations or resolving disputes on articles. Combining these with Wikipedia’s portal for new editors gives you not just the “what” of editing policies, but the “how.”

Community Support: How to Seek Help from Experienced Editors

One of Wikipedia’s greatest strengths is its vibrant, collaborative community. There’s always someone available to help—not because they’re paid to, but because they genuinely care about the integrity of the platform.

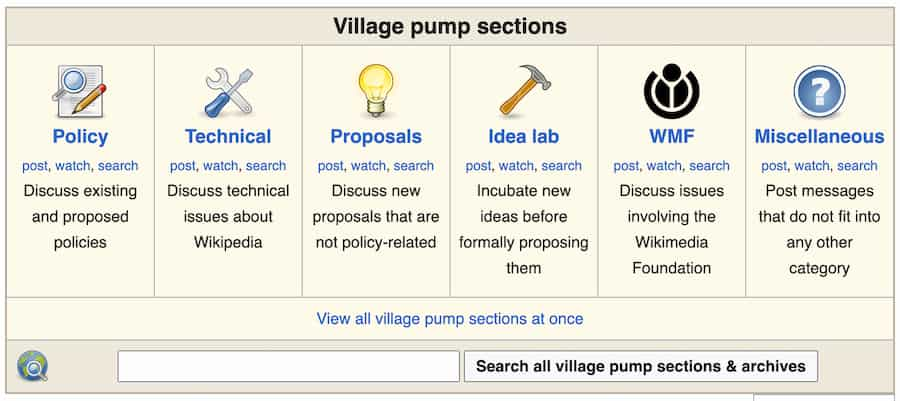

For more technical questions, Village Pump is another resource you’ll want to bookmark. It’s divided into sections—policy, technical, proposals—so you can post questions or review ongoing discussions that might solve your problem before you ask. And let’s not forget Talk Pages, often overlooked but incredibly useful. These are where editors hash out disagreements, propose changes, and document their rationale, giving you an insider view into how policy is applied in real-world edits.

Don’t feel obligated to go it alone. Wikipedia encourages healthy dialogue, and the site itself thrives on collaboration. If you encounter a tricky piece of content that makes neutrality or verifiability feel like a moving target, reaching out on the associated Talk Page can save hours of frustration. The Wikimedia Discord server and active Facebook editing groups are also worth exploring if you prefer real-time interaction or casual discussion.

Monitoring and Tracking Changes with Wikipedia Tools

One of the most valuable aspects of Wikipedia’s open format is how visible everything is. You can’t go wrong by tapping into tools that track article changes, give context to edits, and help you keep tabs on the entries you’re working on. Page histories, for instance, let you see how an article has evolved over time and identify the reasoning behind controversial decisions. By reviewing this, you can evaluate the precedent for what content “sticks” in a challenging article and adapt your approach accordingly.

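For those who want help keeping articles compliant, Huggle and Twinkle are standout tools for semi-automated editing. Huggle is a standalone application for reviewing recent changes and reverting vandalism, and on the English Wikipedia it requires rollback rights. Twinkle is a gadget you enable in your preferences that speeds up routine maintenance such as reverting vandalism, warning users, and tagging articles with cleanup or neutrality notices. Neither tool judges neutrality for you; they make the routine parts of upholding policy faster, which matters most on high-traffic pages.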

Another must-have is the Cite tool, which is integrated directly into the editing interface. It simplifies the process of adding citations by formatting your sources consistently; deciding whether a source meets Wikipedia’s reliability standards is still up to you. Pair this with tools like RefToolbar, which offers extra citation templates, and you’ll avoid one of the most common roadblocks for new editors: inconsistent referencing.
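To make “formatted properly” concrete, here is a rough sketch of the kind of wikitext a citation tool produces. It assembles a `{{cite web}}` template wrapped in `<ref>` tags from a few fields. The function name `cite_web` and the example URL and title are assumptions made for illustration; the real tools support many more parameters and handle edge cases this sketch ignores, such as a title that contains a pipe character.

```python
def cite_web(url, title, website=None, date=None, access_date=None):
    """Return a <ref> tag holding a {{cite web}} template.

    Only fields that were actually supplied are written out, so the
    result never contains empty parameters.
    """
    fields = [
        ("url", url),
        ("title", title),
        ("website", website),
        ("date", date),
        ("access-date", access_date),
    ]
    parts = [f"|{name}={value}" for name, value in fields if value]
    return "<ref>{{cite web " + " ".join(parts) + "}}</ref>"


# Hypothetical source details, used only to show the output shape.
citation = cite_web(
    url="https://example.org/report",
    title="Annual Report",
    access_date="1 May 2024",
)
print(citation)
```

Producing consistent markup is the easy part. Whether the source behind the URL counts as reliable under WP:RS is a judgment no formatter can make.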

When you need to trace where a claim came from, WikiBlame can be a game-changer. It searches an article’s revision history to find the edit in which a specific passage was added, which is invaluable for spotting the origin of biased content or unsupported claims. Whether you’re defending an edit or ensuring your own additions stay within policy parameters, having this kind of transparency at your fingertips is a serious advantage.
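The idea behind this kind of lookup can be sketched in a few lines. The example below is not WikiBlame’s actual code; it is a simplified illustration that runs a binary search over a list of revision texts, oldest first, to find the earliest one containing a phrase. It assumes the phrase stayed in the article once it was added, which real histories do not guarantee because text is often removed and restored. The function name and the sample history are invented for illustration.

```python
def first_revision_containing(revisions, phrase):
    """Return the index of the earliest revision that contains `phrase`.

    `revisions` is a list of article texts ordered oldest to newest.
    Assumes the phrase was never removed after being added; returns None
    if the newest revision does not contain it.
    """
    if not revisions or phrase not in revisions[-1]:
        return None
    lo, hi = 0, len(revisions) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if phrase in revisions[mid]:
            hi = mid        # phrase already present: look earlier
        else:
            lo = mid + 1    # phrase not yet present: look later
    return lo


# Made-up revision texts for a hypothetical article.
sample_history = [
    "Acme is a company.",
    "Acme is a company founded in 1990.",
    "Acme is a company founded in 1990. It is the best in its field.",
    "Acme is a company founded in 1990. It is the best in its field. It has two offices.",
]

origin_index = first_revision_containing(sample_history, "the best in its field")
missing_index = first_revision_containing(sample_history, "award-winning")
```

Here `origin_index` is 2, pointing at the third revision as the one that introduced the unsourced superlative. Binary search checks only a handful of revisions even on long histories; when the text may have come and gone, checking every revision in order is the safer choice.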

Across these tools and resources, there’s a common thread: in Wikipedia, no one is editing in isolation. By engaging with the platform’s guidelines, tapping into its supportive community, and using specialized tools to navigate the intricacies of editing, you don’t just improve your own contributions—you help uphold the collaborative spirit that keeps Wikipedia thriving.

Conclusion

Mastering Wikipedia’s editing rules isn’t just about following policies or navigating a labyrinth of guidelines—it’s about respecting the integrity of a platform that serves as a cornerstone of global knowledge. Whether you’re managing a brand’s online presence or contributing out of personal passion, your edits have the potential to shape how the world learns, interprets, and remembers information. That’s a responsibility worth embracing with care.

As you move forward, think of your efforts not only as a contribution to Wikipedia but as an investment in trust. In an era where misinformation spreads faster than ever, maintaining credible, well-sourced content isn’t just a skill—it’s a service to everyone seeking clarity in a noisy digital landscape. The challenge is to let neutrality, verifiability, and ethical engagement guide your contributions. In doing so, you’re not just playing by the rules—you’re reinforcing the foundation of one of the world’s most trusted resources.

So, here’s your invitation: Don’t just approach editing as a task to check off. See it as a chance to become part of a broader conversation—one where your decisions can enrich public knowledge and set new standards for authenticity in the digital age. After all, Wikipedia is ultimately a reflection of its contributors. Make your reflection one worth looking up to.
